malbec

TPF Noob!

Joined: Sep 29, 2009


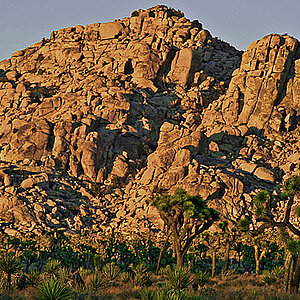

I was asked in a photography workshop: considering the luminance histogram, what does it imply about the dynamic range of the scene?

I answered that the histogram is the average of the RGB channels, and that it implies the scene's dynamic range lets the image represent the wide range of intensity levels found in the scene more accurately.
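One rough way to test the answer above is to build the histogram yourself. The sketch below is illustrative only. It assumes 8-bit RGB pixels given as `(r, g, b)` tuples, and all function names are hypothetical, not any camera's or library's API. It computes a 256-bin brightness histogram two ways: as a plain average of R, G and B, and as a weighted sum using the Rec. 709 luma coefficients (Rec. 601 weights are also common). Whether a particular camera or editor averages the channels or weights them, and with which coefficients, is an assumption here. It then reports the darkest and brightest occupied levels and the share of pixels piled up at 0 and 255. A large pile at either end usually means the scene's dynamic range exceeded what the exposure recorded (clipping), while a histogram confined to the middle suggests a low-contrast scene.

```python
# Minimal sketch. Assumptions: 8-bit RGB pixels as (r, g, b) tuples;
# the helper names are hypothetical, not from a camera or library API.


def luma_average(r, g, b):
    """Plain average of the three channels, as in the answer above."""
    return round((r + g + b) / 3)


def luma_rec709(r, g, b):
    """Weighted sum using the Rec. 709 luma coefficients on 8-bit values."""
    return round(0.2126 * r + 0.7152 * g + 0.0722 * b)


def luminance_histogram(pixels, luma=luma_rec709):
    """Count how many pixels fall on each brightness level 0..255."""
    hist = [0] * 256
    for r, g, b in pixels:
        level = min(255, max(0, luma(r, g, b)))  # guard against float rounding
        hist[level] += 1
    return hist


def summarize(hist):
    """Report where the tones start and end, and how many pixels sit at the extremes."""
    total = sum(hist)
    if total == 0:
        raise ValueError("empty histogram")
    occupied = [level for level, count in enumerate(hist) if count > 0]
    return {
        "darkest": occupied[0],
        "brightest": occupied[-1],
        "shadow_clip_pct": 100.0 * hist[0] / total,
        "highlight_clip_pct": 100.0 * hist[255] / total,
    }


if __name__ == "__main__":
    # Saturated red and blue: equal under a plain average, far apart when weighted.
    print(luma_average(255, 0, 0), luma_average(0, 0, 255))  # 85 85
    print(luma_rec709(255, 0, 0), luma_rec709(0, 0, 255))    # 54 18

    # A toy "scene": 2 black, 5 mid-grey and 3 pure-white pixels.
    pixels = [(0, 0, 0)] * 2 + [(128, 128, 128)] * 5 + [(255, 255, 255)] * 3
    print(summarize(luminance_histogram(pixels)))
```

The red/blue comparison shows why the weighting matters. A plain average rates saturated red and saturated blue as equally bright, while a luma weighting treats blue as much darker, which is closer to how it looks. In the toy scene, 20% of the pixels sit at 0 and 30% at 255, the kind of pile-up at both ends that would point to a scene wider than the recorded range.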

Does that sound about right?
