It is indeed confusing, so let's use an example. Say you shoot a scene of a forest. There's a rock in shadow that is the darkest part of the scene, and there are some leaves that are the brightest part. Each area has an actual luminance in the real world. For the sake of simplicity, let's say:

Rock = 10 photons per second for each pixel it covers on your sensor.

Leaves = 160 photons per second for each pixel they cover on your sensor.

The dynamic range of this scene is 4 stops (160 / 10 = 16 = 2^4).
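If it helps to see the arithmetic spelled out: a stop is a doubling of light, so the number of stops is the base-2 logarithm of the brightest/darkest ratio. A minimal Python sketch (`dynamic_range_stops` is just a name made up for illustration):

```python
import math

def dynamic_range_stops(darkest_rate, brightest_rate):
    """Stops between two photon rates: each stop is a doubling, so take log2 of the ratio."""
    if darkest_rate <= 0 or brightest_rate <= 0:
        raise ValueError("photon rates must be positive")
    return math.log2(brightest_rate / darkest_rate)

print(dynamic_range_stops(10, 160))   # 4.0 (first forest: rock vs. leaves)
print(dynamic_range_stops(20, 1280))  # 6.0 (second forest, further down)
```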

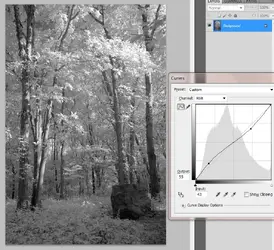

Let's say your camera can capture up to 4 stops of dynamic range. Any scene with more than that will have clipped shadows and/or highlights no matter how well you choose your exposure. Any scene with 4 stops or less can be captured with all of its detail and no clipping. So you can take this image without HDR and still capture all the detail. Like this:

The above image, in the situation described, has a dynamic range of 4 stops.
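To see why "no matter how well you choose your exposure" holds, here's a rough Python sketch under an idealized model I'm assuming for illustration: the scene is laid out on a log2 scale from 0 (the darkest item) up to its dynamic range in stops, and a single exposure records a window exactly as wide as the camera's range, hard-clipping everything outside it. Sweeping that window across many exposure settings shows the best any single shot can do. Both function names are hypothetical.

```python
def clipped_stops(scene_stops, camera_stops, offset):
    """(shadow_clip, highlight_clip) in stops for one exposure.

    Assumed model: the scene spans 0..scene_stops on a log2 scale (0 = darkest item),
    and the exposure records offset..offset + camera_stops, clipping everything outside.
    """
    shadow_clip = min(scene_stops, max(0.0, offset))
    highlight_clip = min(scene_stops, max(0.0, scene_stops - (offset + camera_stops)))
    return shadow_clip, highlight_clip

def least_clipping(scene_stops, camera_stops, step=0.25):
    """Try a sweep of exposure offsets and return the smallest total clipping any of them gets."""
    n = int(round(scene_stops / step)) + 8
    offsets = [i * step for i in range(-n, n + 1)]
    return min(sum(clipped_stops(scene_stops, camera_stops, o)) for o in offsets)

print(least_clipping(4, 4))  # 0.0: a 4-stop scene fits a 4-stop camera
print(least_clipping(6, 4))  # 2.0: 2 stops are lost however you set the exposure
```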

Now let's say the lighting conditions in the actual forest were different. Let's say it was like this:

Rock = 20 photons per second for each pixel it covers on your sensor.

Leaves = 1280 photons per second for each pixel they cover on your sensor.

The dynamic range of this scene is 6 stops (1280 / 20 = 64 = 2^6).

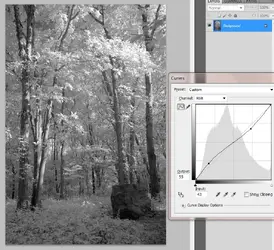

Now you need HDR if you want to capture all the detail in the scene with your 4-stop camera. A center-metered shot (the exposure your camera will tell you to use) would give you the middle 4 stops and clip one stop at each end. That would look bad. It would look like this:

This is still a 4-stop dynamic range image, but now the ends are clipped and you've lost the detail in the leaves and the rock, because the scene had a dynamic range of 6 stops and this time you only captured part of it.
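Here's that center-metered shot in a small Python sketch. It's a simplification (real meters are more involved), but it matches the "middle 4 stops" description: the camera's 4-stop window is centered on the middle of the scene's 6-stop range, with the rock's 20 photons as stop 0 on a log2 scale. The function name is made up for illustration.

```python
ROCK_RATE = 20  # photons per pixel per second; stop 0 on our log2 scale

def center_metered_window(scene_stops, camera_stops):
    """Center the camera's window on the middle of the scene's range (log2 scale, 0 = darkest item).
    Returns the recorded window and how many stops clip at each end."""
    mid = scene_stops / 2
    low, high = mid - camera_stops / 2, mid + camera_stops / 2
    shadow_clip = max(0.0, low)                    # stops below the window go to black
    highlight_clip = max(0.0, scene_stops - high)  # stops above the window go to white
    return (low, high), (shadow_clip, highlight_clip)

window, clip = center_metered_window(6, 4)
print(window)  # (1.0, 5.0)
print(clip)    # (1.0, 1.0): one stop lost at each end
print([ROCK_RATE * 2 ** s for s in window])  # [40.0, 640.0] photons: the range actually recorded
```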

So instead you take one photo at -1 stop and one at +1 stop. That gives you 2 stops of overlap in the middle, plus 2 stops at each end that are covered by only one of the shots. You feed these into HDR software, which gives you what is basically just an ordinary-looking photo, as if it had come from a single shot.
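The coverage arithmetic can be checked with a small Python sketch. It assumes an idealized sensor that records a 4-stop window with hard clipping outside it, a log2 scale with the rock at 0, and that +1 EV doubles the light reaching the sensor, which slides the recorded window one stop toward the shadows. `bracket_coverage` is a hypothetical helper.

```python
def bracket_coverage(scene_stops, camera_stops, evs):
    """Map each exposure compensation (EV) to the slice of the scene it records unclipped."""
    metered_low = (scene_stops - camera_stops) / 2  # bottom of the 0 EV (center-metered) window
    windows = {}
    for ev in evs:
        low = metered_low - ev  # a brighter exposure (+EV) reaches further into the shadows
        windows[ev] = (max(0.0, low), min(float(scene_stops), low + camera_stops))
    return windows

w = bracket_coverage(6, 4, [+1, -1])
print(w)  # {1: (0.0, 4.0), -1: (2.0, 6.0)}

(plus_lo, plus_hi), (minus_lo, minus_hi) = w[1], w[-1]
print(min(plus_hi, minus_hi) - max(plus_lo, minus_lo))  # 2.0 stops of overlap
print(max(plus_hi, minus_hi) - min(plus_lo, minus_lo))  # 6.0 stops covered in total
```

Stops 0 to 2 are covered only by the +1 shot and stops 4 to 6 only by the -1 shot, which is the "2 at each end" part.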

+1 capture (4-stop dynamic range image):

-1 capture (4-stop dynamic range image):

Output of software:

This is exactly the same final image as the first one, but it now has a dynamic range of 6 stops instead of 4. That's because the rock in the actual forest put out 6 stops less light than the brightest leaves, and in both scenarios you captured the scene's full range.
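To see where the extra 2 stops come from, here's a deliberately naive merge sketch in Python. It assumes a linear sensor whose metered shot records the middle 4 stops of the scene, i.e. 40 to 640 photons. It only estimates scene brightness; HDR software typically also aligns the frames, weights pixels more carefully, and tone-maps the result into something a screen can show. All names are made up for illustration.

```python
import math

# Assumed linear sensor: the metered (0 EV) shot records 40..640 photons/pixel/s,
# i.e. the middle 4 stops of a 20..1280 scene. Outside that window detail is lost.
WINDOW_LOW, WINDOW_HIGH = 40.0, 640.0

def capture(rate, ev):
    """Signal recorded for a scene patch at the given exposure compensation, or None if clipped."""
    signal = rate * 2.0 ** ev  # +1 EV doubles the light that reaches the sensor
    return signal if WINDOW_LOW <= signal <= WINDOW_HIGH else None

def naive_merge(rates, evs):
    """Undo each shot's exposure and average the estimates from the shots that didn't clip."""
    merged = []
    for rate in rates:
        estimates = []
        for ev in evs:
            signal = capture(rate, ev)
            if signal is not None:
                estimates.append(signal / 2.0 ** ev)
        merged.append(sum(estimates) / len(estimates) if estimates else None)
    return merged

scene = [20, 160, 1280]             # rock, a midtone, leaves
print(naive_merge(scene, [0]))      # [None, 160.0, None]: one metered shot loses both ends
hdr = naive_merge(scene, [+1, -1])
print(hdr)                          # [20.0, 160.0, 1280.0]
print(math.log2(hdr[-1] / hdr[0]))  # 6.0 stops
```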

So the two final images (and their contrast) are identical, but their dynamic ranges aren't: one required HDR techniques and one didn't. That came down entirely to how harsh the difference in light was in the forest.

On the other hand, you can change the contrast without changing the dynamic range, for instance by using the curves tool like so:

Notice that the tool only rearranges the middle lightness values. It doesn't touch the blackest blacks or the whitest whites (those still fall on the original line of the starting image). Since dynamic range is defined entirely by the endpoints of the range, the dynamic range of this lower-contrast image is EQUAL to that of the image above it.
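A curves adjustment is just a mapping from input tone to output tone. Here's a Python sketch of a piecewise-linear curve that flattens the midtones while pinning the black and white points. The control points are invented for illustration, and real curves tools usually draw a smooth curve through the points rather than straight segments.

```python
# Control points (input, output), tones normalized to 0 = black, 1 = white.
# Hypothetical values: the middle is flattened, the endpoints stay put.
POINTS = ((0.0, 0.0), (0.25, 0.35), (0.75, 0.65), (1.0, 1.0))

def apply_curve(x, points=POINTS):
    """Piecewise-linear tone curve through the control points."""
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("tone must be in [0, 1]")

print(apply_curve(0.0), apply_curve(1.0))               # 0.0 1.0: black and white points untouched
print(round(apply_curve(0.75) - apply_curve(0.25), 6))  # 0.3: midtone spread shrinks from 0.5
```

Because the darkest and brightest tones still map to themselves, the same real-world items remain the unclipped extremes, so only the contrast in between changes.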

Dynamic range of the image here is defined as the ratio between the photon rates of the darkest and lightest areas IN THE ACTUAL FOREST, i.e. of the most extreme items that your image didn't clip.
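That definition translates almost directly into code. A minimal Python sketch, assuming each capture can be described by the range of real-world photon rates it recorded without clipping (the function name and the list of forest items are made up for illustration):

```python
import math

def image_dynamic_range(scene_rates, recorded_low, recorded_high):
    """Stops between the darkest and brightest real-world items the image did NOT clip.

    scene_rates: photon rates (per pixel per second) of items in the actual scene.
    recorded_low, recorded_high: rates the capture recorded without clipping (assumed model).
    """
    unclipped = [r for r in scene_rates if recorded_low <= r <= recorded_high]
    if not unclipped:
        return 0.0
    return math.log2(max(unclipped) / min(unclipped))

forest = [20, 40, 160, 640, 1280]  # hypothetical items, from the rock up to the brightest leaves
print(image_dynamic_range(forest, 40, 640))   # 4.0: center-metered shot, ends clipped
print(image_dynamic_range(forest, 20, 1280))  # 6.0: bracketed and merged
```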

Simply by looking at the final image, though, you can't tell whether the actual forest had a low range and it was a single shot, or whether it had a wide range and the photographer compensated with multiple captures to still get the full range.

High dynamic range (HDR) means doing things (like multiple captures) that extend the dynamic range of your images beyond what your equipment can "normally" / natively produce (i.e. with a single capture, in the case of photography). So the 6-stop image from a 4-stop camera = HDR. The identical-looking 4-stop image from a 4-stop camera = not HDR.
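The definition boils down to a comparison, and the same kind of idealized model also tells you how many bracketed shots a scene needs. A sketch in Python; both helpers are hypothetical, and `shots_needed` assumes each shot records exactly the camera's native range with hard clipping and that shots are spaced evenly.

```python
import math

def is_hdr(image_stops, native_stops):
    """HDR per the definition above: the image holds more range than one native capture can."""
    return image_stops > native_stops

def shots_needed(scene_stops, native_stops, spacing_stops):
    """Minimum bracketed captures, spaced `spacing_stops` apart, to span the whole scene.
    n shots cover native_stops + (n - 1) * spacing_stops stops in total."""
    if scene_stops <= native_stops:
        return 1
    return math.ceil((scene_stops - native_stops) / spacing_stops) + 1

print(is_hdr(6, 4), shots_needed(6, 4, 2))  # True 2: the -1/+1 pair from the forest example
print(is_hdr(4, 4), shots_needed(4, 4, 2))  # False 1: a single capture is enough
```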