KC1

No longer a newbie, moving up!

- Joined: May 5, 2016
- Messages: 295
- Reaction score: 53

While it's nothing new, and almost everyone knows it today, it's still interesting to ponder. I grew up with film, as that was all that existed for most of my life. I have several digital and film cameras and almost always use digital because it's so easy. The convenience is what sold me.

Food for thought:

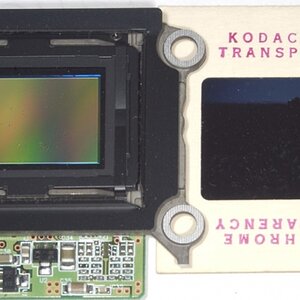

Modern digital cameras use only 1/3 of the photons that reach the sensor; the other 2/3 of the digital image is interpolated by the processor in the conversion from RAW to JPG or TIF.

The image you produce is not the image you see but a computer-rendered version. There is no longer any such thing as a virgin, unmanipulated image, as all images are 2/3 computer interpolation. SOOC means "straight out of computer" in today's digital world.

Time Magazine Article
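The "2/3 interpolated" figure makes more sense once you see what the raw converter is doing. Assuming a typical Bayer color filter array in an RGGB layout (the article doesn't name a sensor), each photosite records only one of red, green, or blue, so for every output pixel one of the three color values is measured and the other two are estimated from neighbors (demosaicing). Below is a rough sketch using plain bilinear averaging, a textbook method and not what any particular camera's processor actually does. The function names `bayer_channel`, `demosaic_bilinear`, and `measured_fraction` are hypothetical, chosen just for illustration.

```python
from fractions import Fraction


def bayer_channel(row, col):
    """Color recorded by the photosite at (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic_bilinear(mosaic):
    """Rebuild an RGB image from a single-channel Bayer mosaic.

    Each pixel keeps the one channel its photosite measured; the other two
    are the mean of same-color photosites in the surrounding 3x3 window
    (clipped at the image border). Assumes the mosaic is at least 2x2.
    """
    h, w = len(mosaic), len(mosaic[0])
    rgb = []
    for r in range(h):
        out_row = []
        for c in range(w):
            own = bayer_channel(r, c)
            pixel = {}
            for ch in "RGB":
                if ch == own:
                    pixel[ch] = float(mosaic[r][c])  # measured value
                    continue
                neighbors = [
                    mosaic[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                    if bayer_channel(rr, cc) == ch
                ]
                pixel[ch] = sum(neighbors) / len(neighbors)  # interpolated
            out_row.append((pixel["R"], pixel["G"], pixel["B"]))
        rgb.append(out_row)
    return rgb


def measured_fraction(h, w):
    """Share of output color values that come straight from the sensor."""
    # One reading per photosite vs. three color values per output pixel.
    return Fraction(h * w, 3 * h * w)


# Hypothetical 4x4 raw readings, just for the demo.
mosaic = [
    [200, 120, 210, 118],
    [110, 40, 115, 42],
    [205, 125, 198, 121],
    [112, 38, 117, 44],
]
rgb = demosaic_bilinear(mosaic)
print(rgb[0][0])                # (200.0, 115.0, 40.0)
print(measured_fraction(4, 4))  # 1/3
```

Real raw converters use smarter, edge-aware methods, but for a Bayer sensor the bookkeeping is the same: one measured value and two estimated values per pixel.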

![[No title]](/data/xfmg/thumbnail/37/37536-3578b4f283f738d862be62d896fa52d5.jpg?1619738132)

![[No title]](/data/xfmg/thumbnail/37/37538-d4704bfd4f0e4b1941649d81ff8edf2c.jpg?1619738133)