spacediver

TPF Noob!

Hope this is an appropriate place to post this. If not, please advise on where I should post this.

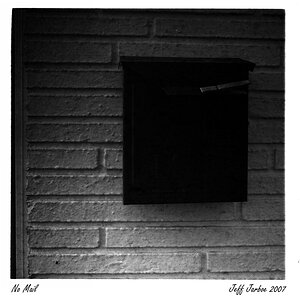

Nutshell version: Load up these two images on your computer display, and take a photo of your display. Try to render the photo so that it matches the original image as closely as possible. Use whatever method you prefer.

First image

Second image

Here is a test pattern that may help you calibrate your exposure for optimizing dynamic range. You can probably use this for getting the white balance correct.

Long version:

I'm quite new to photography, and have been exploring it through a very technical route. I've been using my Canon EOS 450D for scientific imaging of CRT displays. One of the things I've been doing is accurately reproducing colors, by turning my camera into a colorimeter that is highly accurate for my display.

A fun way to test this is to take a photo of a nice wallpaper on your monitor, and try to render it so that it looks close to how the original looked on your screen. Comparing the images also gives a unique insight into the quality of image reproduction - you can literally look at the original image and the photo, side by side on the same screen.

Here's a breakdown of my process:

First, I used a colorimeter (X-Rite i1Display Pro) to measure the XYZ values of the three primaries of my display. I then took a RAW image of each primary, and using Matlab, I measured the average value of each of the three channels in the RAW image (each of which corresponds to a different filter on the color filter array overlaying the sensor). Using this information, I created a matrix that converts values from the RAW image into XYZ values.
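A minimal sketch of how such a matrix could be built, in Python rather than Matlab. The numbers are made-up placeholders, not measurements from this setup, and the RAW averages are assumed to be black-level subtracted. With the colorimeter readings as the columns of one 3x3 matrix and the RAW channel averages as the columns of another, the conversion matrix is the first multiplied by the inverse of the second.

```python
def inverse_3x3(m):
    """Invert a 3x3 matrix (list of rows) using the adjugate formula."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular; primaries are not independent")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def matmul_3x3(p, q):
    """Multiply two 3x3 matrices given as lists of rows."""
    return [[sum(p[r][k] * q[k][c] for k in range(3)) for c in range(3)]
            for r in range(3)]


def camera_to_xyz_matrix(xyz_primaries, raw_primaries):
    """Return M such that M @ raw_primaries == xyz_primaries.

    Both arguments hold one display primary per COLUMN (red, green, blue).
    Rows of xyz_primaries are X, Y, Z; rows of raw_primaries are the
    camera's R, G, B channel averages.
    """
    return matmul_3x3(xyz_primaries, inverse_3x3(raw_primaries))


# Hypothetical colorimeter readings (columns: red, green, blue primary).
xyz_primaries = [
    [41.2, 35.8, 18.0],   # X
    [21.3, 71.5, 7.2],    # Y
    [1.9, 11.9, 95.0],    # Z
]
# Hypothetical mean RAW values per camera channel for the same primaries.
raw_primaries = [
    [2000.0, 600.0, 150.0],   # camera R channel
    [400.0, 3000.0, 700.0],   # camera G channel
    [100.0, 500.0, 2500.0],   # camera B channel
]

cam_to_xyz = camera_to_xyz_matrix(xyz_primaries, raw_primaries)
```

Because the matrix is fitted to exactly three primaries, it reproduces those three measurements exactly and is only expected to be accurate for colors that are mixtures of them, which is why it suits one specific display.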

I then determined which shutter speed would make best use of the camera's dynamic range, relative to the dynamic range of my display. I used a test pattern on my display that contained 16 bars ranging from black to peak white. This also allowed me to calculate the normalizing factor for the Y value (which represents relative luminance).
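One way this step could be sketched in Python, under assumptions of my own: the mean RAW value of each of the 16 bars has already been measured at a few candidate shutter speeds, the sensor saturates at the full 14-bit value, and a 5% safety margin is kept below that. The function names, the margin, and all numbers are hypothetical.

```python
RAW_MAX = 2 ** 14 - 1  # assumes the full 14-bit range; real white level may be lower


def pick_shutter(measurements, raw_max=RAW_MAX, headroom=0.95):
    """Return the longest shutter time whose brightest bar does not clip.

    measurements maps shutter time in seconds to a list of mean RAW
    values, one per bar of the test pattern.
    """
    usable = [t for t, bars in measurements.items()
              if max(bars) < headroom * raw_max]
    if not usable:
        raise ValueError("every candidate exposure clips the white bar")
    return max(usable)


def y_normalizer(white_raw, cam_to_xyz):
    """Scale factor that maps the Y of peak white to 1.0.

    white_raw is the camera [R, G, B] average of the white bar and
    cam_to_xyz is a 3x3 camera-to-XYZ matrix (row 1 produces Y).
    """
    y_white = sum(m * v for m, v in zip(cam_to_xyz[1], white_raw))
    return 1.0 / y_white


def bars(peak):
    """Hypothetical linear ramp of 16 bars from black to the given peak."""
    return [peak * i / 15 for i in range(16)]


measurements = {1 / 60: bars(6000.0), 1 / 30: bars(12000.0), 1 / 15: bars(16383.0)}
best = pick_shutter(measurements)  # longest exposure that keeps white unclipped
```

Picking the longest unclipped exposure places peak white near the top of the sensor's range, which leaves the most RAW levels for the dark bars.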

I then chose two pretty images, and took RAW photos of them. Using the cameraRGB-to-XYZ matrix I had created earlier, I subsampled the RAW image, and transformed each 2x2 square of sensel data into a single X value, a single Y value, and a single Z value. I then transformed these values into linear sRGB values, and then into gamma-corrected sRGB values (using 2.4 as the exponent, as this is what my display is calibrated to). Note that this approach is different from conventional demosaicing algorithms: those attempt to preserve the full sensel resolution, whereas my approach gives up resolution (each 2x2 block becomes one pixel, so width and height are halved) for an increase in image accuracy.
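The pipeline in this step could look roughly like the following Python sketch. Several details are assumptions rather than anything confirmed above: the Bayer layout is taken to be RGGB, the two green sensels in each block are averaged, the RAW values are black-level subtracted, and the XYZ-to-linear-sRGB matrix is the commonly quoted D65 one. The gamma step is a pure power law with exponent 2.4, as described.

```python
# Commonly quoted XYZ (D65) to linear sRGB matrix.
XYZ_TO_LINEAR_SRGB = [
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
]


def apply_matrix(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-element vector."""
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def gamma_encode(linear, exponent=2.4):
    """Clip to [0, 1] and apply a pure power-law encoding."""
    clipped = min(max(linear, 0.0), 1.0)
    return clipped ** (1.0 / exponent)


def render_bayer(raw, cam_to_xyz, y_scale=1.0):
    """Turn each 2x2 block of a Bayer mosaic into one encoded sRGB pixel.

    raw is a 2D list of sensel values in an assumed RGGB layout;
    y_scale normalizes XYZ so that peak white has Y == 1.
    """
    out = []
    for row in range(0, len(raw) - 1, 2):
        out_row = []
        for col in range(0, len(raw[row]) - 1, 2):
            r = raw[row][col]
            g = (raw[row][col + 1] + raw[row + 1][col]) / 2.0  # average both greens
            b = raw[row + 1][col + 1]
            xyz = [y_scale * c for c in apply_matrix(cam_to_xyz, [r, g, b])]
            rgb_linear = apply_matrix(XYZ_TO_LINEAR_SRGB, xyz)
            out_row.append([gamma_encode(c) for c in rgb_linear])
        out.append(out_row)
    return out
```

Each output pixel comes only from sensels inside its own 2x2 block, so no color is interpolated from neighboring blocks as it would be in conventional demosaicing.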

One thing I may also experiment with is implementing some dithering to preserve as much of the original 14-bit data as I can. But for now, it works fairly well.
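As an illustration of what such dithering might look like, here is a small Python sketch of one-dimensional error diffusion. This is just one possible technique, chosen for brevity, and the function name is hypothetical. It quantizes values in [0, 1] to 8-bit codes and carries each pixel's rounding error into the next pixel, so the local average keeps precision that plain rounding would throw away.

```python
def quantize_row_with_dither(values, levels=256):
    """Quantize a row of floats in [0, 1] to integer codes 0..levels-1.

    The rounding error of each pixel is added to the next one
    (1D error diffusion), so the running average tracks the input.
    """
    top = levels - 1
    out = []
    error = 0.0
    for v in values:
        target = v * top + error
        q = min(max(int(round(target)), 0), top)
        error = target - q  # carry the residual forward
        out.append(q)
    return out


# A flat patch whose true level sits halfway between two 8-bit codes.
row = [100.5 / 255] * 1000
codes = quantize_row_with_dither(row)
mean_code = sum(codes) / len(codes)
```

Here plain rounding would give every pixel the same code, while the dithered row alternates between codes 100 and 101 so that its average stays at the in-between level.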

Given that my display is fairly well calibrated to sRGB standards, the original image and my photo should look similar on any display. For what it's worth, the original images are quite a bit superior to my photos when the originals are viewed at full size. Also, my display is a Sony GDM FW900.

Here are the original images and the photos of them. I've resized them for viewability on this forum.

![[No title]](/data/xfmg/thumbnail/38/38261-db20f6f92ee8f0d4c5cf1536e308638b.jpg?1619738546)

![[No title]](/data/xfmg/thumbnail/30/30886-4d4f2b370f36c175a23901cc8689aea4.jpg?1619734498)