Gavjenks


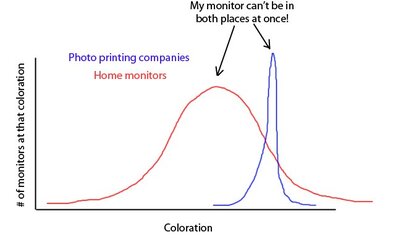

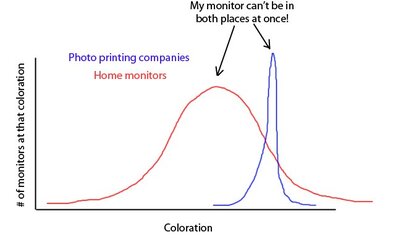

And what I'm getting from people in this thread so far is that nobody has ever heard of such a thing as monitor makers attempting to make monitors to any particular standard, certainly not to the printer's photo standards. So almost certainly, then, the reality is something like this:

(The blue is probably even closer to a single line with almost no variation)

And there is no one solution. If I want the maximum number of people to see my work as I see it, I need either one monitor at each calibration or software that switches my monitor back and forth between calibrations. Then I edit each photo under whichever calibration matches where I intend to publish it, on paper or online.

Assuming I give enough of a crap to bother. Which I DO, because it sounds like a fun project in and of itself =D
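Here is a minimal Python sketch of the "flip between calibrations" idea. It rests on a few assumptions. The names `CalibrationTarget`, `TARGETS`, `decode`, and `pick_target` are hypothetical. The D65 white point and the piecewise tone curve for web work come from the sRGB standard. Pairing print work with a D50 white point and a plain 2.2 gamma is only an illustrative guess, not a printer's spec. The sketch also shows why the choice matters: the same 8-bit pixel value maps to a different relative luminance depending on which tone curve the monitor is calibrated to.

```python
from dataclasses import dataclass


# Hypothetical calibration targets, for illustration only.
# "online" uses the sRGB white point and tone curve; the "print" values
# (D50 white point, plain 2.2 gamma) are an assumption, not a standard.
@dataclass(frozen=True)
class CalibrationTarget:
    name: str
    white_point: str
    tone_curve: str  # "srgb" or a power-law gamma written like "gamma2.2"


TARGETS = {
    "online": CalibrationTarget("web", "D65", "srgb"),
    "print": CalibrationTarget("print", "D50", "gamma2.2"),
}


def decode(code_value: int, tone_curve: str) -> float:
    """Map an 8-bit code value (0-255) to relative luminance (0.0-1.0)."""
    v = code_value / 255.0
    if tone_curve == "srgb":
        # Piecewise sRGB decoding curve: linear toe, then a 2.4 power segment.
        return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4
    if tone_curve.startswith("gamma"):
        # Plain power-law curve, e.g. "gamma1.8" -> v ** 1.8.
        return v ** float(tone_curve[len("gamma"):])
    raise ValueError(f"unknown tone curve: {tone_curve}")


def pick_target(destination: str) -> CalibrationTarget:
    """Choose which calibration to edit under, based on where the photo will be published."""
    try:
        return TARGETS[destination]
    except KeyError:
        raise ValueError(f"no calibration target for {destination!r}") from None


if __name__ == "__main__":
    # The same mid-grey pixel, shown under several tone curves.
    for curve in ("srgb", "gamma1.8", "gamma2.2", "gamma2.4"):
        print(f"{curve:>8}: {decode(128, curve):.3f}")
    print(pick_target("print"))
```

Switching calibrations then amounts to calling `pick_target("online")` or `pick_target("print")` before editing and loading the matching profile. How that profile actually gets applied depends on the calibration tool, and that step is not shown here.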

